LLM Training as a Service

Expert assistance to help organizations securely train or fine-tune large AI models on powerful, dedicated supercomputing resources.

This is a high-value, project-based service that includes expert consultation, secure data integration, management of the training run, and delivery of a final model.

Suited to organizations that train foundation models from scratch or run large-scale fine-tuning on secure proprietary data, this offering involves the temporary, dedicated reservation of a significant portion of the supercomputer (for example, 128, 256, 512, or 1024 GPUs) for a single training job.

A high-touch engagement

LLM Training as a Service delivers a premium, fully managed experience designed for organizations that need precision and reliability. The service includes:

- Strategic consultation with domain experts to define objectives, select architectures, and optimize workflows.

- Secure, compliant data integration, ensuring encryption, confidentiality, and adherence to regulatory standards.

- End-to-end training orchestration, from resource allocation to monitoring and troubleshooting during the run.

- Validation and delivery of a complete model, packaged for deployment with performance benchmarks and documentation.

Dedicated infrastructure

Your project gets exclusive access to a high-performance computing environment:

- Temporary reservation of 128 to 1024 GPUs on a supercomputer cluster.

- Guaranteed isolation for maximum throughput, zero interference, and predictable performance.

- Access to high-speed interconnects and storage optimized for large-scale datasets.

Provisioning model

- Project-based allocation, tailored to specific training objectives and timelines.

- No shared or multi-tenant architecture: resources are fully dedicated to your workload for the duration of the engagement.

Use cases

- Ideal for developing custom foundation models, where scale and control are essential.

- Well suited to deeply localized LLMs, such as African-language models, and to domain-specific AI systems that require massive compute and expert supervision.

Pricing metric

A flat rate per GPU-hour, charged for the entire reserved cluster, plus additional capacity such as storage and a high-speed network link between the customer's data center and the AI factory.
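The pricing metric above reduces to simple arithmetic: the whole reserved cluster is billed per GPU-hour, whether or not every GPU is busy, and add-ons are charged on top. The sketch below illustrates that calculation. It is a minimal estimate under stated assumptions, not an actual price list: the rate, the add-on names (`storage`, `network_link`), and the assumption that add-ons are billed as fixed amounts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Reservation:
    """Hypothetical description of a dedicated cluster reservation."""
    gpu_count: int             # reserved GPUs, e.g. 128, 256, 512 or 1024
    hours: float               # wall-clock duration of the reservation
    rate_per_gpu_hour: float   # hypothetical flat rate, currency-agnostic
    extras: dict[str, float]   # hypothetical add-ons billed as fixed amounts


def gpu_hours(r: Reservation) -> float:
    # Every reserved GPU is billed for the full duration, busy or idle.
    return r.gpu_count * r.hours


def estimate_cost(r: Reservation) -> float:
    # Flat GPU-hour charge for the whole cluster, plus add-on capacity.
    return gpu_hours(r) * r.rate_per_gpu_hour + sum(r.extras.values())


if __name__ == "__main__":
    # Two-week reservation of 256 GPUs at a made-up rate of 2.50 per GPU-hour.
    job = Reservation(
        gpu_count=256,
        hours=14 * 24,
        rate_per_gpu_hour=2.50,
        extras={"storage": 5000.0, "network_link": 3000.0},
    )
    print(f"GPU-hours: {gpu_hours(job):,.0f}")
    print(f"Estimated cost: {estimate_cost(job):,.2f}")
```

With these illustrative inputs, 256 GPUs reserved for 336 hours come to 86,016 GPU-hours. Billing the full reservation rather than measured utilization is what makes the cost predictable for the customer.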

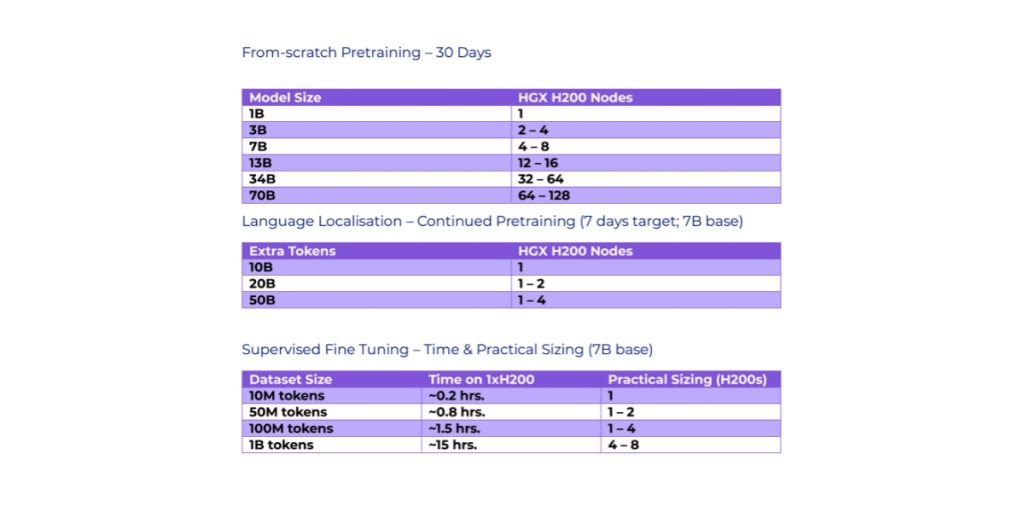

Illustration of the HGX H200 configuration required for LLM training and localization (to be confirmed with NVIDIA)

Computer Vision Model Training as a Service

CVMTaaS is a specialized, project-based offering designed for organizations that build or fine-tune computer vision models on proprietary image data. The service supports both foundation-model training and domain-specific adaptation for tasks such as object detection, segmentation, classification, and visual search.

Secure data access

Supports sensitive visual datasets with privacy and compliance controls.

Expert consultation

Temporarily reserves a large portion of a supercomputer (for example, 128 to 1024 GPUs) for a single training job.

Training execution management

Runs on dedicated GPU clusters optimized for vision workloads (for example, 128 to 1024 GPUs).

Delivery model

Final models are delivered with performance benchmarks and in deployment-ready formats.