AIFaaS

AI Factory as a Service

Give your business the tools and frameworks it needs to build, train, and deploy AI on a fully managed, secure, multi-tenant GPU layer.

Fully managed, enterprise AI workflow

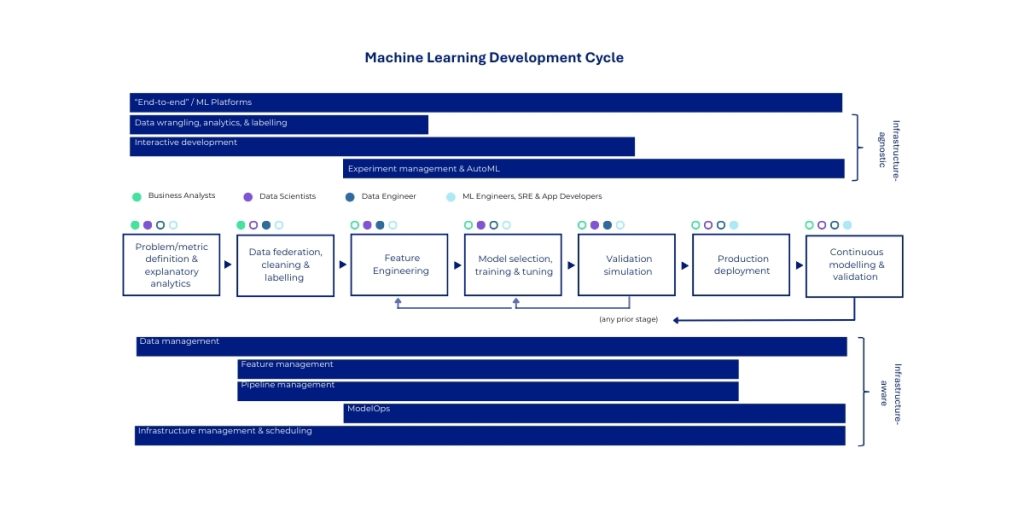

AIFaaS is our premier, high-value platform offering that provides a fully managed, end-to-end environment for the entire enterprise AI workflow. Built securely on our multi-tenant GPUaaS layer, this service is a commercial implementation of the complete NVIDIA AI Enterprise software suite, delivered as a private, isolated “workbench” for an organisation’s data science and ML engineering teams. It abstracts away the immense complexity of building and maintaining a production-grade AI platform, allowing teams to focus on delivering business value.

The service provides managed access to a comprehensive set of NVIDIA’s industry-leading AI frameworks. This includes the NVIDIA NeMo framework, which offers tools for customising models through techniques such as fine-tuning, and for grounding their answers in external knowledge through Retrieval-Augmented Generation (RAG). Together, these let clients securely adapt state-of-the-art foundation models to their own proprietary data.
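RAG leaves the foundation model's weights unchanged and instead supplies relevant passages from the client's own data at query time. The sketch below shows only the prompt-assembly step, assuming a retriever has already returned the passages. The `build_rag_prompt` helper, the prompt template and the `max_chars` budget are illustrative assumptions, not part of the NeMo API.

```python
def build_rag_prompt(question: str, passages: list[str], max_chars: int = 2000) -> str:
    """Assemble a grounded prompt from retrieved passages (hypothetical helper)."""
    context_parts = []
    used = 0
    for i, passage in enumerate(passages, start=1):
        entry = f"[{i}] {passage.strip()}"
        # Stop once the next passage would exceed the character budget.
        if used + len(entry) > max_chars:
            break
        context_parts.append(entry)
        used += len(entry)
    context = "\n".join(context_parts)
    return (
        "Answer the question using only the numbered context passages. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    # Toy passages for illustration only.
    passages = [
        "Refunds are issued within 14 days of receiving the returned item.",
        "Returns must be started within 30 days of delivery.",
    ]
    print(build_rag_prompt("How long do refunds take?", passages))
```

Capping the context by characters is a crude stand-in for a token budget; a real pipeline would count tokens with the model's own tokenizer.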

NVIDIA NIM

NVIDIA NIM™ provides containerised, GPU-accelerated AI inference microservices. They work with both pre-trained and custom AI models and expose standard APIs that integrate seamlessly into your existing applications, frameworks, and workflows. Each microservice packages a specific model (such as a language translation model or a text summarisation model) with an inference engine that NVIDIA has optimised for the target GPU, here the H200 architecture. This optimisation lowers latency and raises throughput, which is critical for real-time, user-facing applications.
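NIM microservices for LLMs expose an OpenAI-compatible HTTP API, so a client can call them with a plain JSON POST. A minimal sketch using only the Python standard library, assuming a NIM container reachable at `http://localhost:8000` and serving the `meta/llama3-8b-instruct` model; both values depend on your deployment and are assumptions here, as are the helper names.

```python
import json
import urllib.request

# Assumed values: the host, port and model name depend on how the NIM container is deployed.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL = "meta/llama3-8b-instruct"


def build_chat_request(prompt: str, model: str = MODEL, max_tokens: int = 256) -> dict:
    """Build an OpenAI-style chat completion payload (hypothetical helper)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.2,
    }


def send_chat_request(payload: dict, url: str = NIM_URL, timeout: float = 30.0) -> str:
    """POST the payload to the endpoint and return the first completion's text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses carry the generated text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    payload = build_chat_request("Summarise our returns policy in one sentence.")
    print(json.dumps(payload, indent=2))
    # Uncomment once a NIM endpoint is reachable:
    # print(send_chat_request(payload))
```

Because the API follows the OpenAI request shape, existing OpenAI client libraries can usually be pointed at the NIM base URL instead of hand-rolling requests.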

Enterprise Search

Deploy embedding NIMs for semantic document search

Customer Support

LLM NIMs powering intelligent chatbots

Content Creation

Image generation NIMs for marketing teams

Healthcare

Medical imaging analysis with vision NIMs

Financial Services

Document processing and analysis
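For the Enterprise Search use case, an embedding NIM turns each document and each query into a vector, and search reduces to ranking documents by their similarity to the query vector. A minimal sketch of that ranking step using cosine similarity, assuming the embeddings have already been fetched from the NIM. The helper names and the toy three-dimensional vectors are hypothetical; real embeddings have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_documents(
    query_vec: list[float], doc_vecs: dict[str, list[float]], top_k: int = 3
) -> list[tuple[str, float]]:
    """Return up to top_k (doc_id, score) pairs, most similar first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Toy 3-dimensional "embeddings" standing in for vectors returned by an embedding NIM.
    docs = {
        "refund-policy": [0.9, 0.1, 0.0],
        "shipping-times": [0.1, 0.9, 0.1],
        "careers-page": [0.0, 0.1, 0.9],
    }
    query = [0.8, 0.2, 0.0]
    for doc_id, score in rank_documents(query, docs, top_k=2):
        print(f"{doc_id}: {score:.3f}")
```

At scale, the linear scan here would be replaced by a vector database or an approximate nearest-neighbour index.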

NVIDIA NeMo

NVIDIA NeMo™ is an enterprise-grade platform for managing the complete lifecycle of AI agents through modular, interconnected components. NeMo provides microservices and tools for curating data, customising and evaluating models, implementing safety controls, and monitoring performance.

NeMo microservices include:

- NeMo Curator

- NeMo Customizer

- NeMo Evaluator

- NeMo Retriever

- NeMo Guardrails

- NeMo Agent Toolkit

Banking

A banking chatbot uses NeMo Guardrails to keep conversations away from confidential investment data, block attempts to extract customer data, and ensure that any financial information it provides carries the required disclaimers.
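NeMo Guardrails itself defines rails declaratively (Colang flows plus a YAML configuration), so the following is not its API. It is a plain-Python sketch of the same idea for the banking example: an input rail that refuses requests aimed at customer or confidential data, and an output rail that appends a disclaimer to financial answers. The regex patterns, disclaimer text and `generate` callback are hypothetical placeholders.

```python
import re
from typing import Callable

# Hypothetical patterns; a production rail would typically use a classifier or an LLM-based check.
BLOCKED_INPUT_PATTERNS = [
    re.compile(r"\b(account|card)\s+numbers?\b", re.IGNORECASE),
    re.compile(r"\bcustomer\s+(data|details|records)\b", re.IGNORECASE),
    re.compile(r"\bconfidential\b.*\binvestment", re.IGNORECASE),
]
FINANCIAL_TERMS = re.compile(r"\b(interest|invest\w*|loan|mortgage|savings)\b", re.IGNORECASE)
DISCLAIMER = "This information is general guidance only and is not financial advice."
REFUSAL = "Sorry, I can't help with that request."


def input_rail(user_message: str) -> bool:
    """Return True if the message may be passed on to the model."""
    return not any(pattern.search(user_message) for pattern in BLOCKED_INPUT_PATTERNS)


def output_rail(bot_message: str) -> str:
    """Append the disclaimer to answers that mention financial products."""
    if FINANCIAL_TERMS.search(bot_message) and DISCLAIMER not in bot_message:
        return f"{bot_message}\n\n{DISCLAIMER}"
    return bot_message


def guarded_reply(user_message: str, generate: Callable[[str], str]) -> str:
    """Run the input rail, call the model through `generate`, then run the output rail."""
    if not input_rail(user_message):
        return REFUSAL
    return output_rail(generate(user_message))


if __name__ == "__main__":
    def fake_model(message: str) -> str:
        # Stand-in for a real model call.
        return "Our savings account pays a variable interest rate."

    print(guarded_reply("Show me the customer records for John Smith", fake_model))
    print(guarded_reply("What savings accounts do you offer?", fake_model))
```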

Training

A training platform takes a GPT base model and fine-tunes it on 50,000 historical customer interactions to create an AI agent that handles returns, complaints, and product questions following company policies.
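Before fine-tuning, historical interactions are usually converted into supervised prompt/completion examples and split into training and validation sets. A minimal sketch, assuming each interaction is a dict with hypothetical `customer_message` and `agent_reply` fields and a JSON Lines output with `prompt` and `completion` keys. Check the exact dataset schema your fine-tuning service (for example NeMo Customizer) expects before relying on this layout.

```python
import json
import random
import sys
from typing import Optional, TextIO


def to_sft_record(interaction: dict, policy_hint: str) -> Optional[dict]:
    """Convert one interaction into a prompt/completion pair, or None if incomplete."""
    customer = (interaction.get("customer_message") or "").strip()
    reply = (interaction.get("agent_reply") or "").strip()
    if not customer or not reply:
        return None
    return {
        "prompt": f"{policy_hint}\nCustomer: {customer}\nAgent:",
        "completion": reply,
    }


def split_records(records: list, val_fraction: float = 0.1, seed: int = 0) -> tuple[list, list]:
    """Shuffle a copy of the records (input is not mutated) and split into (train, validation)."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


def write_jsonl(stream: TextIO, records: list) -> None:
    """Write one JSON object per line (JSON Lines) to an open text stream."""
    for record in records:
        stream.write(json.dumps(record, ensure_ascii=False) + "\n")


if __name__ == "__main__":
    # Toy interactions; a real run would load the exported historical interactions.
    interactions = [
        {"customer_message": "My parcel arrived damaged.", "agent_reply": "Sorry to hear that. I can arrange a replacement."},
        {"customer_message": "How do I start a return?", "agent_reply": "You can start a return from your order page."},
        {"customer_message": "Hello?", "agent_reply": ""},  # incomplete, so it is skipped
    ]
    hint = "Follow the company returns and complaints policy."
    records = [r for r in (to_sft_record(i, hint) for i in interactions) if r is not None]
    train, val = split_records(records, val_fraction=0.5)
    # Swap sys.stdout for an open file (e.g. train.jsonl) in practice.
    write_jsonl(sys.stdout, train)
```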

NVIDIA AI Applications SDKs

NVIDIA AI Applications SDKs are domain-specific, end-to-end development platforms that bundle the entire AI stack (libraries, frameworks, pre-trained models, tools, and workflows) into comprehensive toolkits designed for specific industries and use cases.

Think of them as complete solution packages rather than individual building blocks. While NIM and the NeMo microservices are like LEGO bricks you assemble yourself, the Applications SDKs are more like pre-designed LEGO sets with instructions for building specific things (a castle, a spaceship, and so on).

Example: NVIDIA Riva is a GPU-accelerated SDK for building and deploying fully customisable, real-time conversational AI applications. It includes automatic speech recognition (ASR), text-to-speech (TTS), and neural machine translation (NMT).

Kubernetes

For scalable orchestration of AI workloads across GPU clusters, enabling multi-tenant resource allocation and automated scaling.
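Multi-tenant GPU allocation on Kubernetes commonly combines a namespace per tenant, a `ResourceQuota` capping that tenant's GPU requests, and pods that request GPUs through the `nvidia.com/gpu` extended resource exposed by NVIDIA's device plugin. A minimal sketch that generates those manifests as Python dicts, assuming one namespace per tenant; the tenant name, image and quota values are hypothetical.

```python
import json


def tenant_manifests(tenant: str, image: str, gpu_quota: int, gpus_per_pod: int = 1) -> list[dict]:
    """Return Namespace, ResourceQuota and Pod manifests for one tenant."""
    if gpus_per_pod > gpu_quota:
        raise ValueError("a pod cannot request more GPUs than the tenant quota allows")
    namespace = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": tenant}}
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{tenant}-gpu-quota", "namespace": tenant},
        # Caps the total number of GPUs that all pods in the namespace may request.
        "spec": {"hard": {"requests.nvidia.com/gpu": str(gpu_quota)}},
    }
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": f"{tenant}-inference", "namespace": tenant},
        "spec": {
            "containers": [
                {
                    "name": "inference",
                    "image": image,
                    # GPUs are an extended resource; when only a limit is set, it is used as the request.
                    "resources": {"limits": {"nvidia.com/gpu": str(gpus_per_pod)}},
                }
            ]
        },
    }
    return [namespace, quota, pod]


if __name__ == "__main__":
    manifests = tenant_manifests(
        "tenant-a", "registry.example.com/tenant-a/inference:latest", gpu_quota=4
    )
    # Wrap the manifests in a v1 List so they can be applied together.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

The printed `List` can be piped to `kubectl apply -f -`. Automated scaling (for example with a HorizontalPodAutoscaler) is outside the scope of this sketch.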

MLOps and LLMOps Tooling

Managed pipelines for model training, fine-tuning, deployment, and monitoring with observability, governance, and compliance baked in.
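Governance in a model pipeline often takes the form of a promotion gate: a candidate model is deployed only if its evaluation metrics meet agreed thresholds, and every decision is recorded for audit. A minimal, hypothetical sketch of such a gate; the metric names, thresholds and `PromotionDecision` record are illustrative assumptions, not part of any specific MLOps product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class PromotionDecision:
    """Audit record for one promotion attempt (hypothetical schema)."""
    model_version: str
    approved: bool
    failures: list[str] = field(default_factory=list)
    decided_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def promotion_gate(
    model_version: str,
    metrics: dict[str, float],
    thresholds: dict[str, tuple[str, float]],
) -> PromotionDecision:
    """Approve only if every threshold is met. Each threshold is ("min" or "max", limit)."""
    failures = []
    for name, (kind, limit) in thresholds.items():
        if kind not in ("min", "max"):
            raise ValueError(f"unknown threshold kind {kind!r} for {name}")
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} < required minimum {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} > allowed maximum {limit}")
    return PromotionDecision(model_version, approved=not failures, failures=failures)


if __name__ == "__main__":
    # Toy thresholds and metrics for illustration only.
    thresholds = {"accuracy": ("min", 0.90), "p95_latency_ms": ("max", 200.0)}
    print(promotion_gate("v2", {"accuracy": 0.93, "p95_latency_ms": 150.0}, thresholds))
    print(promotion_gate("v3", {"accuracy": 0.85}, thresholds))
```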